Although I built CERNLib a long time ago, apart from running PAW and looking at some old Ntuples, I hadn't got round to building my old ZEUS analysis code.

Set Up the Environment

CERNLib is available as a package on Ubuntu. However

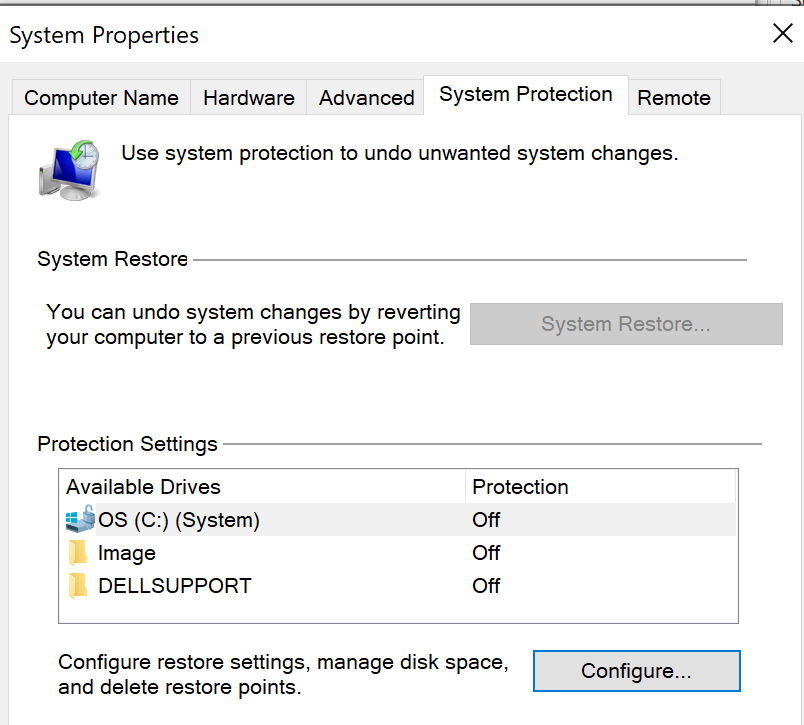

I've seen issues around running this on 64-bit Linux, so for practicality I decided to set up a 32-bit Linux VM. As noted before, I opted for Linux Mint on VirtualBox and picked the 32-bit version of Mint 19.3 Tricia.

Code Changes

The Fortran compiler has moved on a bit since October 2000, which was the last time I ran this code. I needed to make a few changes to compile my source successfully. Fortunately, nothing too significant was required.

.EQ. to .EQV.

I was using .EQ. for logical comparison of a LOGICAL variable, for example:

IF (Q2WTFIRST.EQ..TRUE.) THEN

However, this should be .EQV.:

IF (Q2WTFIRST.EQV..TRUE.) THEN
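As a minimal sketch of the difference: .EQ. compares numeric or character values, while .EQV. and .NEQV. compare LOGICAL values. The example is written in free-form Fortran (save it as a .f90 file) rather than the fixed-form style of the original code, and other_flag is a made-up variable for illustration only.

```fortran
program eqv_demo
  implicit none
  logical :: q2wtfirst
  logical :: other_flag   ! hypothetical second flag, for illustration only

  q2wtfirst = .true.
  other_flag = .false.

  ! .eqv. is the equivalence operator for LOGICAL operands
  if (q2wtfirst .eqv. .true.) print *, 'first pass'

  ! Testing the LOGICAL directly is equivalent and shorter
  if (q2wtfirst) print *, 'first pass (direct test)'

  ! .neqv. is the logical "not equal" (exclusive or)
  if (q2wtfirst .neqv. other_flag) print *, 'flags differ'
end program eqv_demo
```

This should build with something like gfortran eqv_demo.f90 -o eqv_demo.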

Using 1 and 0 for True and False

Related to the above, the compiler threw an error when I used .eq. with 1 or 0 to represent true or false:

tltcut = 0

... Change tltcut to 1 if the condition is met

if (tltcut.eq.1) then

Fix was to change the condition to:

if (tltcut) then
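A minimal sketch of the same pattern with the flag treated as a LOGICAL throughout, assuming tltcut is declared LOGICAL. The names x and limit and their values are invented for illustration and are not taken from the analysis code.

```fortran
program flag_demo
  implicit none
  logical :: tltcut
  real :: x                          ! hypothetical quantity being tested
  real, parameter :: limit = 0.5     ! hypothetical threshold

  tltcut = .false.                   ! instead of tltcut = 0
  x = 0.7

  ! Set the flag when the condition is met (instead of tltcut = 1)
  if (x > limit) tltcut = .true.

  ! A LOGICAL is tested directly; no comparison with 1 is needed
  if (tltcut) then
     print *, 'condition met'
  else
     print *, 'condition not met'
  end if
end program flag_demo
```

Using .true. and .false. for the assignments as well means the code does not rely on any compiler extension for converting between INTEGER and LOGICAL.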

DFLIB

At the time, Bristol University were trying out Visual Fortran (DEC, now Intel Visual Fortran). We used its DFLIB library in a Math error handler routine. DFLIB has quite a history, but the upshot is that it's only supported on Windows. I just took out the routine using it!

Makefile

Visual Fortran controlled the build and link steps itself. To build with gfortran on Linux I had to create a Makefile, which is an art in itself! The main issue I had to overcome was linking the CERNLIB libraries. Of the required libraries below, mathlib, packlib and kernlib were available to the linker on the standard path. pdflib, however, was in a separate folder that had to be added with the -L flag. It also took some looking around to find the correct name: in the end I needed pdflib804.

-L /usr/lib/i386-linux-gnu -lmathlib -lpacklib -lkernlib -lpdflib804

.inc Files

Include files in Fortran are inserted into the source program. This is useful if you have a common block that you don't want to write over and over, for example defining variables.

They were referenced in the source files with:

#include "common.inc"

Incidentally, the # means the line is handled by the C preprocessor rather than by the standard Fortran INCLUDE statement.

My inc files were mixed in with the Fortran source files. The compiler didn't like this so I updated the folder structure to use separate src/inc/exe folders. Funnily enough this reflected the original folder structure when I ran the code on Unix boxes before we switched to Visual Fortran. The circle is complete!

How to run the Analysis code

This took a bit of remembering. The code was driven by a number of input files.

- Steering cards. These contain a list of cuts made to select the events. Different cards define different cuts and therefore allow me to analyse systematic errors.

- File listing input Data .rz ntuples

- File listing input Background .rz ntuples

- File listing input MonteCarlo .rz ntuples

Of course, the naming convention wasn't particularly helpful. For a run on 1997 data there would be:

- stc97_n for the steering cards

- fort.45 for the Data file list

- fort.46 for the Background file list

- fort.47 for the MonteCarlo file list
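Names like fort.45 are what gfortran (and many other compilers) use by default for a unit that is read or written without an explicit OPEN. As a minimal sketch of that mechanism, not the actual analysis code, the program below writes a small sample list and then reads it back through unit 45. The .rz names are placeholders, and the sketch overwrites any fort.45 in the current directory, so run it in a scratch folder.

```fortran
program read_file_list
  implicit none
  integer, parameter :: list_unit = 45
  character(len=256) :: ntuple_name
  integer :: ios, nfiles

  ! Create a small sample list so the sketch runs on its own.
  ! The .rz names are placeholders, not real ZEUS files.
  open(unit=list_unit, file='fort.45', status='replace', action='write')
  write(list_unit, '(a)') 'sample_data_1.rz'
  write(list_unit, '(a)') 'sample_data_2.rz'
  close(list_unit)

  ! No OPEN here: gfortran connects unit 45 to fort.45 on first use.
  nfiles = 0
  do
     read(list_unit, '(a)', iostat=ios) ntuple_name
     if (ios /= 0) exit            ! end of file or read error
     nfiles = nfiles + 1
     print '(a,i0,2a)', 'ntuple ', nfiles, ': ', trim(ntuple_name)
  end do
  close(list_unit)
end program read_file_list
```

The fort.N default is compiler behaviour rather than part of the Fortran standard, so an explicit OPEN with a descriptive file name is the more portable choice for new code.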

I should make clear at this point that the files I'm running against are not the "raw" ZEUS data files. A preliminary job was run against the full set of data on tape to select a cut-down set of events that passed some loose cuts and to create an ntuple with the necessary fields for this particular analysis. My ntuples were saved after this first step.

Results

I ran the code against a single 1997 data, background and MonteCarlo ntuple.

This created, amongst some other files, an israll97.hbook file. I was able to load this by opening PAW and entering hi/file 1 israll97.hbook. hi/li listed the available histograms, which looked about right, and when I opened one of them with hi/pl 105: success! I had created a histogram of E-Pz (total energy minus momentum in the z, or beampipe, direction) from my saved data files. I used this originally to help select the population of events to use in my analysis.

I've put the code up on GitHub:

The README.md describes the list of input and output files.

Next steps...

- Run the PAW macro (kumac) files I used after this analysis step and add to GitHub.

- Run against the full data set. I want to see how quickly this runs on current hardware. Running the full 96/97 data set against all steering cards used to take about 20 hours!

- Create a GitHub release that includes sample .rz files, hopefully allowing anyone to run this!

- Try to build the 64-bit version of CERNLIB so I don't have to run on a 32-bit VM.